We have a FOAK Problem. Can data be part of the solution?

The journey from concept to commercialization for FOAK projects is challenging, but data-driven strategies can significantly reduce risk.

BIO

Christian Okoye is a Principal at Generate Capital responsible for investments in emerging sustainable infrastructure opportunities.

Prior to Generate, Christian was a Partner at Sidewalk Infrastructure Partners, where he focused on transactions advancing virtual power plants. Before Sidewalk, Christian was a Director of Venture Investments at Emerson Collective, where he managed venture capital and growth equity investments across energy and environmental solutions, including waste-to-energy, distributed energy resource management, and energy efficiency technologies. Christian has also worked for Denham Capital’s Energy Infrastructure platform investing in infrastructure development in emerging markets, and started his career in Goldman Sachs’ Natural Resources group. Christian holds an MBA and MS in Energy and Environmental Resources from Stanford University, and a BA in Economics from the University of Chicago.

This article is from a blog series originally published by Generate’s Christian Okoye along with Caleb Cunningham and Deanna Zhang. The original blogs are available here, here, and here.

Throughout my career in climate tech VC, infrastructure investing, and project development, I’ve focused on commercializing decarbonization technologies. It’s been disheartening, but not surprising, to hear about the continued challenges many first-of-a-kind (FOAK) projects face, especially as the urgency to scale solutions to address climate change grows. Unfortunately, I’ve experienced these challenges firsthand too many times. The recent setbacks covered in the press serve as sobering reminders of the complexities involved in turning promising innovations into commercially viable solutions.

- Monolith: Despite securing over $1 billion in government loans and backing from large institutional investors, Monolith has faced cash shortages and project delays while scaling its low-emission carbon black and ammonia process.

- Material Evolution: Encountered obstacles while building its first factory for low-carbon cement, including ground stability issues and difficulty securing an adequate electricity supply, which delayed progress.

- Fulcrum: Experienced repeated delays and technical setbacks, including nitric acid corrosion and a buildup of cement-like material in its gasification system. These problems delayed production of sustainable aviation fuel (SAF), eventually leading to the plant’s shutdown and the company’s bankruptcy filing.

- Northvolt: Navigating rumors of sabotage and looming bankruptcy following a cancelled BMW contract as the company struggles to ramp up production.

- And many more that have not hit the press but probably will soon.

Yet venture dollars continue to charge headfirst into these projects while most infrastructure investors stay on the sidelines. This divide is unsustainable, and it is more complex than any silver-bullet solution built on more development and concessionary dollars can fix. Stakeholders (concessionary or market-return seeking) cannot accurately underwrite risk that they cannot measure. While we shore up the ecosystem of “non-dilutive” investors (FYI: the ask for infrastructure, government, and philanthropic dollars to step into FOAK projects does come across as simply passing often unsustainable risk from VC to someone else), we should build real-world data and insights that quantify these scale-up risks and help navigate these hurdles, to prevent another Cleantech 1.0 scenario and climatetech winter. The data to do this exists and can be unlocked.

Moving from Hype to Reality — Key Takeaways:

- Build Data-Driven Scale-Up Risk Management: FOAK projects must go beyond traditional financial models by leveraging existing but siloed databases and benchmarks from similar scale-up projects to build robust risk profiles. This will lead both to better underwriting of projects, where investors can have more conviction, and to better project planning for entrepreneurs navigating the key scale-up risks.

- Embrace Modularity: Technologies that are designed with scalable, modular components see smoother implementation and reduced risk, allowing for faster adaptation. The very simple idea is to reduce the surface area for human error. The benefits of modularity have been known for a long time in hardware development and have been increasingly embraced by climatetech. There is also good data showing this works and can be quantified.

- Complete Thorough Front-End Loading (FEL): Detailed planning and preparation, especially in data collection and risk assessment, are crucial. Project delivery experts emphasize using Basic Data Protocols to address gaps in technical information early in the process (see attached whitepaper from IPA).

- Crawl, Walk, Run — and don’t skip steps: Massive scale-ups are more challenging. A progression from pilot to demo to FOAK, with a modest scale-up at each step, is easier for non-dilutive capital providers to digest. Starting with smaller, commercial-scale plants rather than massive megaprojects can reduce risk, allowing companies to resolve technical challenges before scaling up; those that skip steps by scaling their core technology by more than 10x fail over and over. Treat it like scaling up Lego blocks (ideally modular ones).
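To make the “don’t skip steps” rule concrete, here is a minimal Python sketch that checks each step of a scale-up path against the >10x rule of thumb above. The stage names, unit sizes, and the `check_scale_up_path` helper are hypothetical, and the 10x threshold is the heuristic from the text rather than a validated limit.

```python
def check_scale_up_path(stages: list[tuple[str, float]], max_step: float = 10.0) -> list[str]:
    """Flag each step whose core-technology unit size grows more than max_step.

    `stages` is an ordered list of (stage name, core-technology unit size),
    with every size in the same unit (e.g. tonnes/day or MW).
    """
    warnings = []
    # Compare each stage with the one immediately before it.
    for (prev_name, prev_size), (name, size) in zip(stages, stages[1:]):
        ratio = size / prev_size
        if ratio > max_step:
            warnings.append(f"{prev_name} -> {name}: {ratio:.0f}x exceeds {max_step:.0f}x")
    return warnings


# Hypothetical path: the demo-to-FOAK step jumps 50x and gets flagged.
path = [("pilot", 1.0), ("demo", 8.0), ("FOAK", 400.0)]
for warning in check_scale_up_path(path):
    print(warning)
```

The sizes should describe the core technology unit, not the project nameplate: a project that repeats an already proven module 100 times would pass this check even though its total capacity is 100x larger.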

These steps all either improve our ability to understand (and price, and value) the risk, or they reduce the actual level of risk.

Here’s our ask: Partner with us to share insights and data. Together, the FOAK ecosystem can unlock this data and form the partnerships needed to build the next generation of scale-up risk management and analytic tools for FOAK project developers and investors. If you know of good sources of data and insights on climate tech project costs, schedules, and performance (e.g., degradation, useful life, uptime), reach out and let us know. We’ve shared some here and below in the spirit of kicking off this partnership.

Part 2: Why Data Insights Matter in Bridging the FOAK Gap

The world of first-of-a-kind (FOAK) projects is one of both enormous potential and considerable risk. These projects, which bring cutting-edge climate technologies to market, face obstacles like project delays, cash shortages, and technical challenges that can derail their success. In a previous blog post, we explored these issues and highlighted how “stakeholders (concessionary or market-return seeking) cannot accurately underwrite risk that they cannot measure.”

While the climate tech world is filled with innovation and promise, many FOAK projects still struggle to secure the infrastructure investment needed to scale. The gap between venture capital and infrastructure financing largely stems from the inability to properly assess, measure, and then allocate project risks. This is where data-driven insights can step in to make a meaningful difference.

This blog explores WHY it is important to measure and assess project risks.

The Power of Data in Risk Assessments

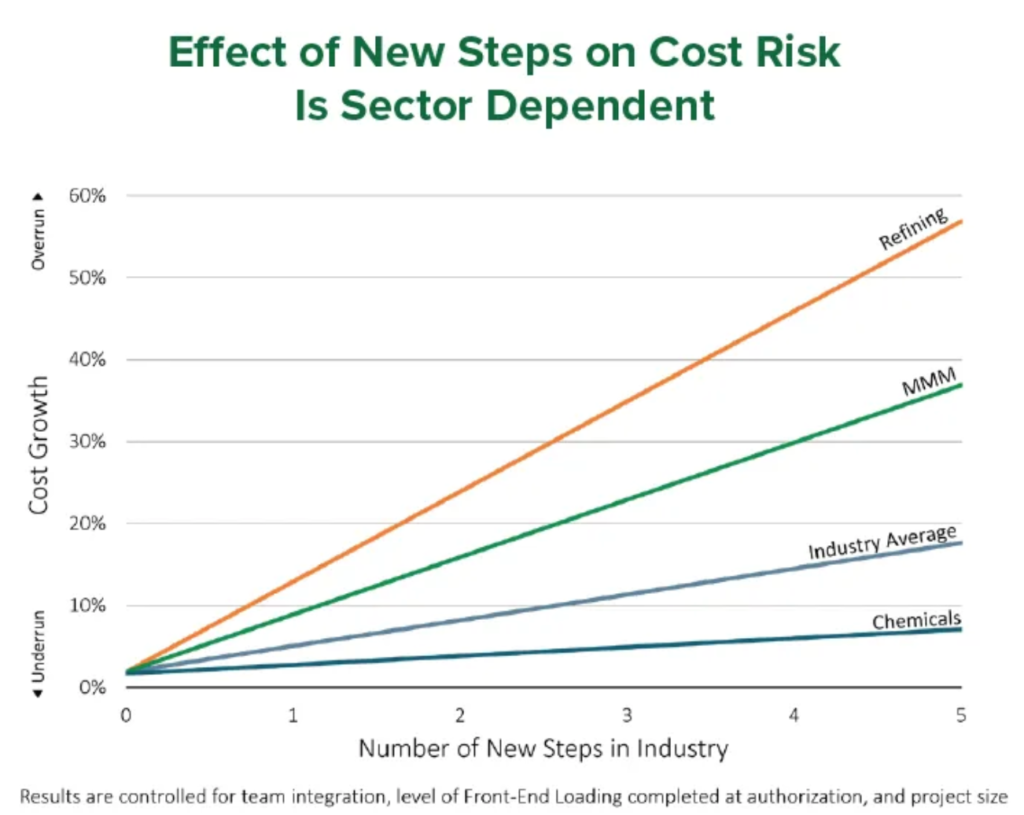

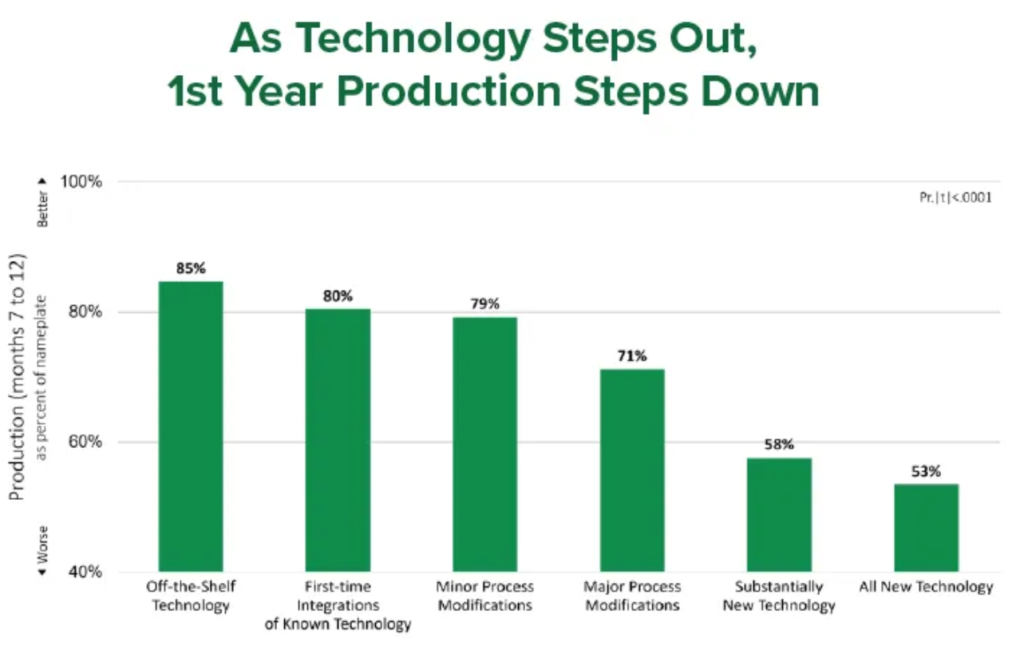

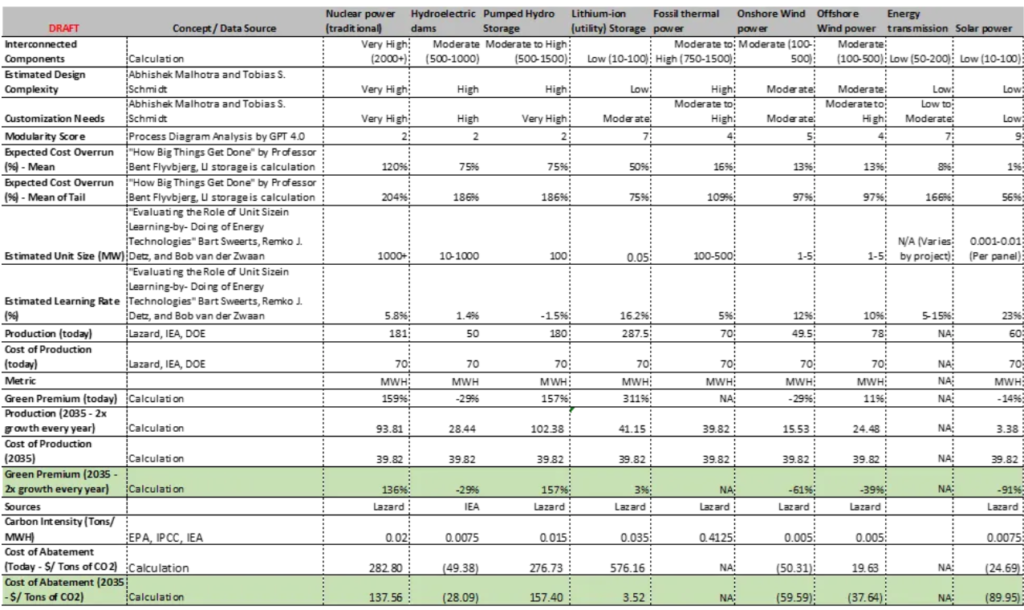

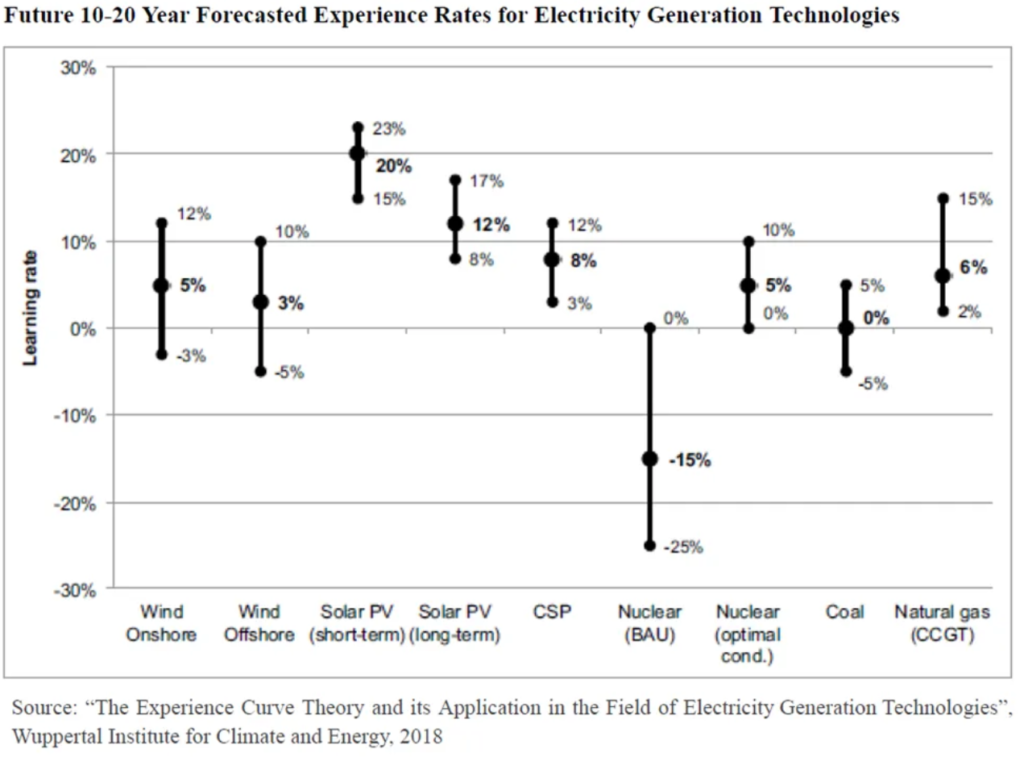

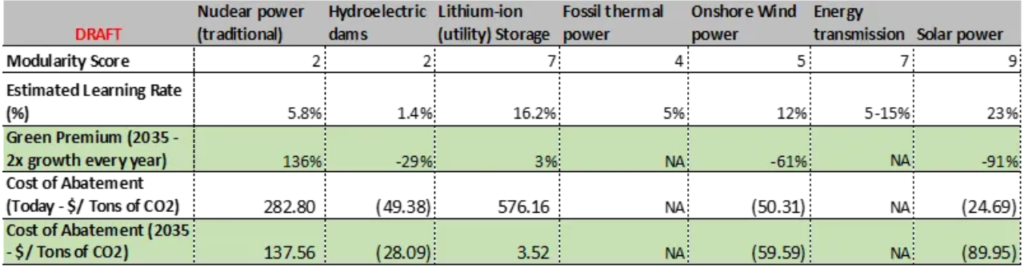

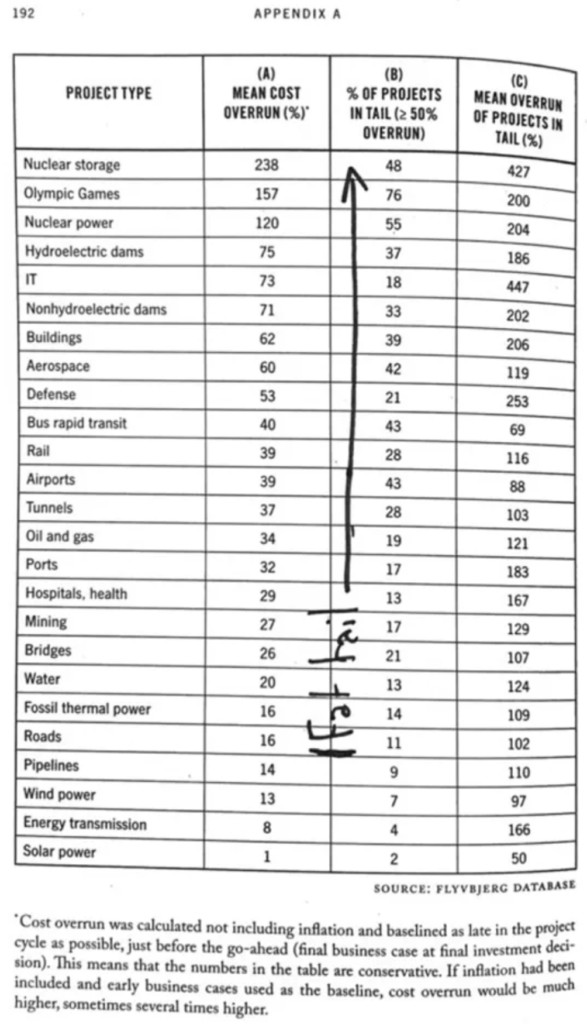

One of the biggest challenges in applying historical insights to FOAK projects is the fragmented nature of available data. Project risks are often assessed based on siloed datasets, which makes it difficult to develop comprehensive risk profiles that could guide investment decisions. However, there is robust data on scaled decarbonization technologies. It’s worth learning from that data and understanding what we can extrapolate to emerging decarbonization technologies. We could start with the chart below:

Note: This chart is in draft form and continues to be a work in progress. The sources, estimates, and calculations are identified.

Correlations and Implications

Upon closer examination of the data, we can observe some strong correlations that have significant implications for climate tech projects:

1. Modularity and Cost Overruns: There’s a notable inverse correlation between modularity and cost overruns. Technologies with higher modularity scores, such as solar power (9) and energy transmission (7), tend to have lower mean cost overruns (1% and 8%, respectively). Conversely, technologies with lower modularity, like nuclear power (2), show much higher mean cost overruns (120%).

2. Modularity and Learning Rates: There’s typically a strong correlation between modularity and learning rates in technology deployment. More modular technologies tend to benefit from faster learning rates, because the product iteration cycle is shorter and improvements can be made and rolled out more easily across multiple projects (Tesla and SpaceX have been best in class at this, delivering projects in record time even without traditional EPCs). This can lead to quicker cost reductions and performance improvements over time.

These factors and correlations also shape a technology’s green premium and cost of abatement over time, underscoring the importance of prioritizing modularity in climate tech projects. While some high-priority decarbonization technologies (e.g., baseload power like nuclear) inherently require more complexity and customization, finding ways to increase their modularity could significantly reduce risks and improve learning rates, ultimately accelerating the deployment and cost-effectiveness of climate solutions.

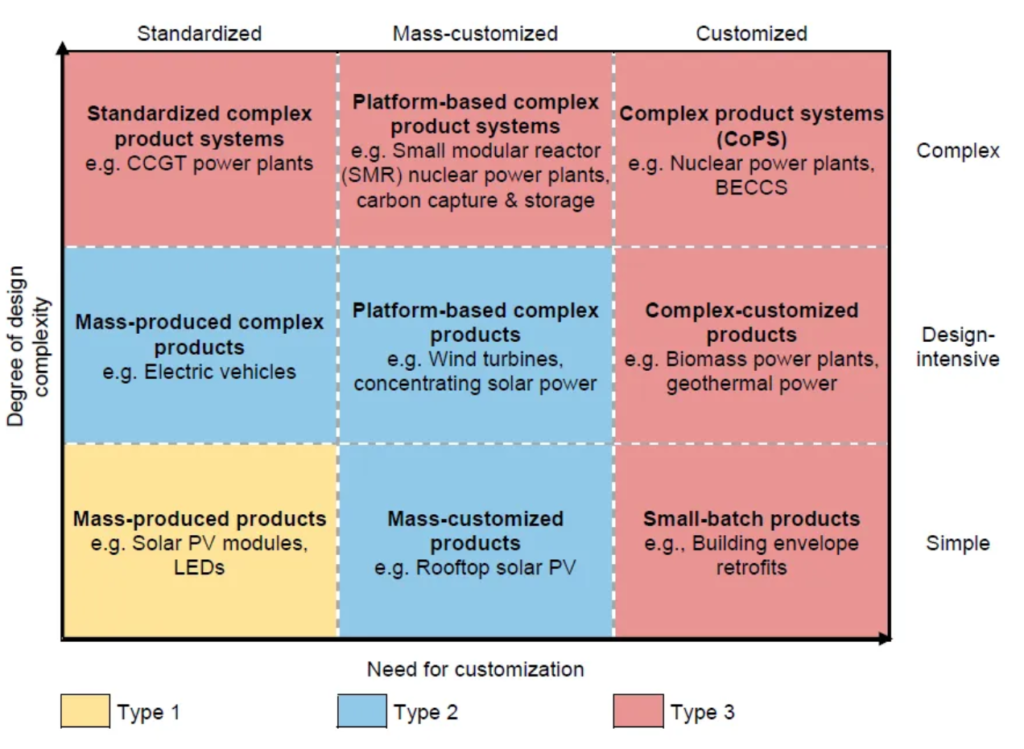

How do we Measure Modularity?

Let’s start by digging deeper into the factors that have made this chart hold true for the decarbonization technologies that have scaled to date.

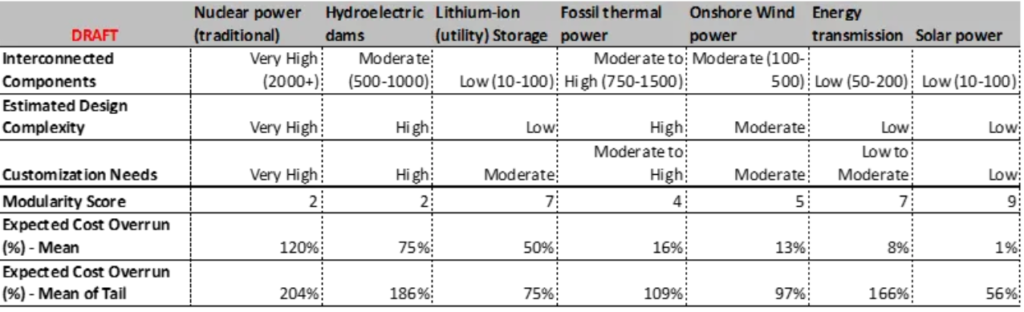

We can assess four key data points that can inform risk assessment in climate tech projects:

1. Estimated Design Complexity

The design complexity of a project can often be gauged by the number of interconnected components it involves. Our data shows a wide range:

- Nuclear power (traditional): Very High (2000+)

- Hydroelectric dams: Moderate (500–1000)

- Solar power: Low (10–100)

This data suggests that technologies like solar power may have lower integration risks compared to more complex systems like nuclear power. These insights can help stakeholders anticipate potential design-related challenges and allocate resources accordingly. The number of interconnected components is a key determinant of design complexity. As the number of components increases, so does the overall complexity of the system design.

2. Customization Needs

The level of customization required for each project type varies significantly:

- Nuclear power: Very High

- Onshore Wind power: Moderate

- Solar power: Low

Higher customization needs often correlate with increased project risks and potential delays. Customization in this context means the degree to which the technology needs to be adapted to its destination environment, in terms of physical environment, regulatory context, or user preferences.

3. Modularity Score

Modularity can significantly impact a project’s flexibility and scalability. Our data assigns each technology an estimated modularity score (higher is better):

- Solar power: 9

- Energy transmission: 7

- Nuclear power: 2

This suggests that solar power projects may offer more flexibility in implementation and scaling compared to nuclear power projects. The modularity score is influenced by both the design complexity and customization needs of a project. Generally, projects with lower design complexity and lower customization needs tend to have higher modularity scores.

4. Historical Cost Overrun

Perhaps one of the most critical data points for investors is the historical cost overrun percentage. We have two metrics:

- Mean Cost Overrun: Ranges from 1% (Solar power) to 120% (Nuclear power)

- Mean of Tail Cost Overrun: Ranges from 56% (Solar power) to 204% (Nuclear power). This is the mean cost overrun among projects whose overrun exceeded 50%. It illustrates how “fat-tailed” the risk is: not just how likely a cost overrun is, but how large the overrun tends to be when it happens.

These figures provide valuable insights into the financial risks associated with different technologies. Solar power and lithium-ion storage projects have shown a remarkably low probability and magnitude of cost overruns, while nuclear power projects have historically faced significant cost escalations.
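Both overrun metrics are straightforward to compute from project-level data. The sketch below assumes overruns are stored as fractions of the original budget (0.2 means 20% over) and uses a made-up sample of ten projects; `overrun_metrics` is a hypothetical helper name, and the 50% tail threshold follows the definition above.

```python
from statistics import mean


def overrun_metrics(overruns: list[float], tail_threshold: float = 0.5) -> dict[str, float]:
    """Summarize cost overruns expressed as fractions (0.2 = 20% over budget).

    Returns the mean overrun across all projects, the share of projects in the
    tail (overrun above tail_threshold), and the mean overrun within that tail.
    """
    tail = [x for x in overruns if x > tail_threshold]
    return {
        "mean_overrun": mean(overruns),
        "tail_share": len(tail) / len(overruns),
        # NaN signals "no tail projects" rather than a misleading zero.
        "mean_of_tail": mean(tail) if tail else float("nan"),
    }


# Hypothetical reference class of ten completed projects (not real data).
sample = [0.0, 0.05, 0.1, 0.1, 0.2, 0.3, 0.6, 0.9, 1.5, 2.0]
print(overrun_metrics(sample))
```

Reporting the tail share next to the mean of tail separates how often large overruns happen from how large they get, which is the distinction the “fat-tailed” framing above is making.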

Two indicators missing from the chart due to lack of sufficient data, but discussed extensively in the literature on infrastructure project management, are:

1) Schedule Overruns, meaning slippage in the period between project green-light and commercial operation; and

2) Performance vs. Projected, meaning the degree to which a project delivers the benefits projected, be they electrons or clean hydrogen molecules.

Together, these three (Cost, Schedule, Performance) make up the Iron Law of Megaprojects: “Large projects are over budget, over time, and under benefits, over and over again — only 47.9% are on budget, 8.5% are on budget and on time, and 0.5% are on budget, on time, and on benefits.” (Flyvbjerg, How Big Things Get Done)
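The Iron Law figures compound quickly. The short calculation below treats the three quoted percentages as nested shares of the same project sample, which is how the quote reads; the conditional shares are our own arithmetic, not figures from the book.

```python
# Shares of projects quoted in the Iron Law of Megaprojects.
on_budget = 0.479
on_budget_and_time = 0.085
on_budget_time_and_benefits = 0.005

# Of the projects that cleared one hurdle, what share also cleared the next?
p_time_given_budget = on_budget_and_time / on_budget
p_benefits_given_budget_and_time = on_budget_time_and_benefits / on_budget_and_time

print(f"On time, given on budget: {p_time_given_budget:.1%}")
print(f"On benefits, given on budget and on time: {p_benefits_given_budget_and_time:.1%}")
```

Read this way, fewer than one in five on-budget projects were also on time, and only about one in seventeen of those also delivered the promised benefits.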

Conclusion

The path to scaling FOAK projects is fraught with risk, but with comprehensive data insights on historical scaled decarbonization technology, we can begin to extrapolate insights to quantify and manage these risks in ways that were previously unimaginable. By integrating data on project complexity, design, customization, modularity, and historical performance, we can provide a more comprehensive understanding of project risks, leading to better decision-making for both developers and investors.

As we continue to gather and analyze data from climate tech projects worldwide, our ability to assess and mitigate risks will only improve. This data-driven approach is key to bridging the gap between innovation and infrastructure investment in the climate tech sector.

If you’re involved in FOAK projects or have access to valuable data on project costs, schedules, or performance, we’d love to collaborate and make these projects more investable and successful.

Let’s work together to de-risk the future of climate innovation.

Note: This post is not an indictment of nuclear power, which we believe will be increasingly necessary for decarbonization. There are many reasons for cost overruns in the nuclear sector, including policy reacting to public perception of safety, but we believe the primary causes are the complexity of the hardware and the lack of standardized designs. Comparing an investor’s risk-adjusted returns from deploying solar versus nuclear is also not an apples-to-apples analysis, given the very different socio-economic value each can provide to the grid. This post aims only to uncover the learnings and risk implications of how we have historically approached building these decarbonization projects.

Part 3: Data in Action: Project Readiness Level and Lessons in the Real World

In our last two posts, we explored why data insights matter for bridging the gap in First-of-a-Kind (FOAK) projects and examined the fundamental challenges these projects face. The core insight is that the climate tech ecosystem still misprices the risk of new technologies when they reach the FOAK stage, but better data can 1) help to accurately assess risk, and 2) help to mitigate risk.

Today, we shift from the “why” and “what” to illustrate the utility of these principles in the real world.

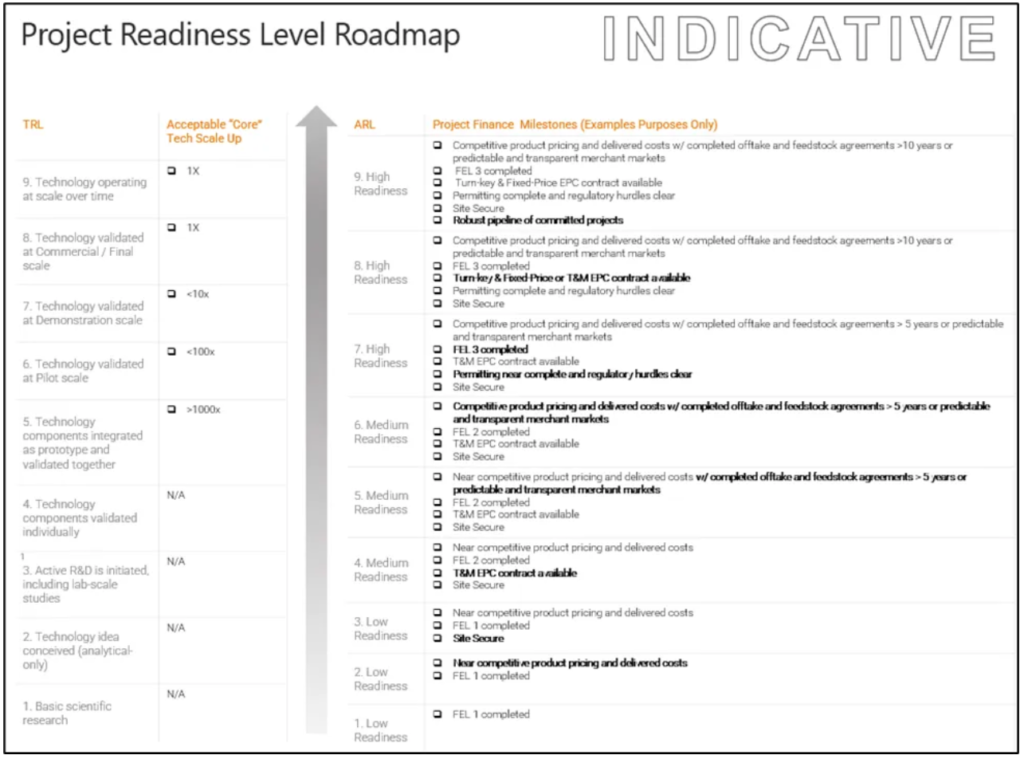

First, we show how to apply the data concepts discussed previously within a Project Readiness Level framework (a work in progress) that assesses project risk across TRL, ARL, core technology vs. balance of plant, and key development milestones.

Then we share three lessons from real-world cases:

- Lesson 1: Make modularity a core design principle to reap non-obvious rewards beyond just cost (Case from Standard Microgrid)

- Lesson 2: Separate the E from the PC in Engineering, Procurement, and Construction to align incentives and reduce the risk of cost/schedule overruns

- Lesson 3: Consider Pursuing Integration Risk over Technology Risk (Case from H2 Green Steel)

Project Readiness Level

Integrating the various data into a single coherent set of heuristics or “risk scoring” should be the ultimate goal of any data set. We don’t yet have enough data to be able to firmly build such a framework, but as an indicative framing, a “Project Readiness Level” score (incorporating TRL, ARL, and crucial project development milestones) might be a step forward:

Project Readiness Level can help developers and investors alike to consider the various risk centers of a project holistically:

- TRL (Technology Readiness Level), to consider the overall maturity of the technology

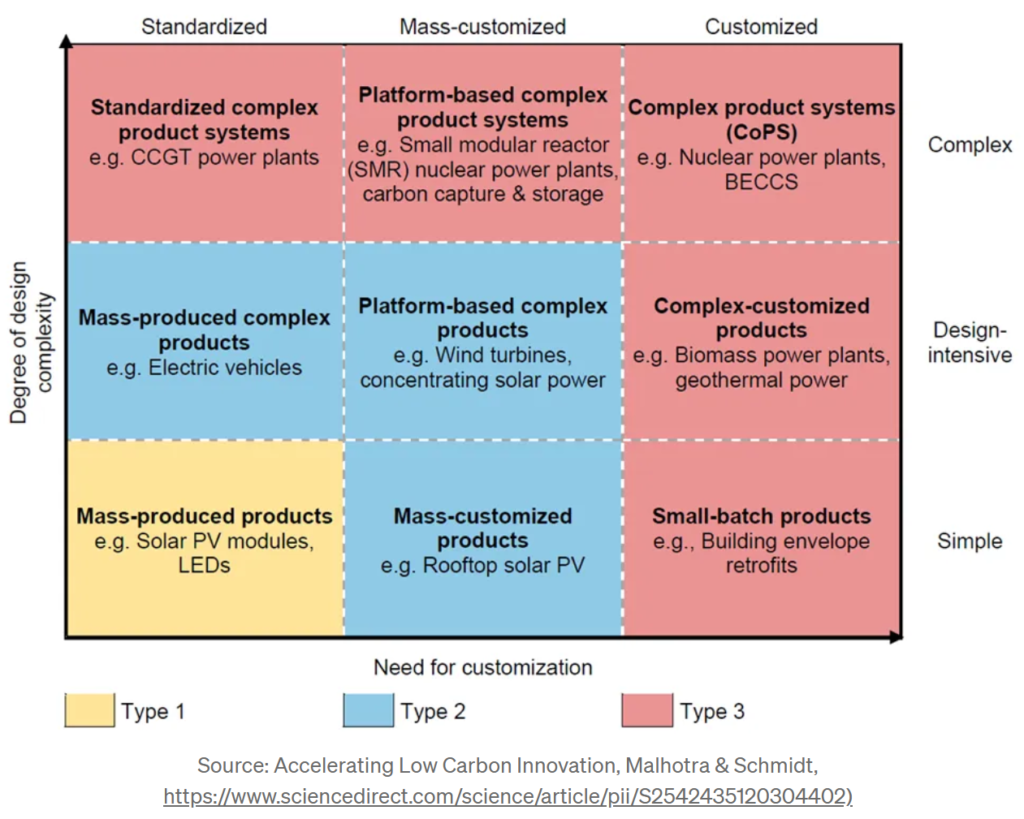

- Projects prior to TRL 9 will likely not yet have fully standardized designs (recall our 3×3 matrix of complexity vs. customization in our first blog). Stakeholders should consider this when assessing risk, as there will be considerable learning during first-time project construction and execution.

- Core Technology Scale-Up, to assess the true scale-up risk of the core technology on its own, rather than the often misleading scale-up of the balance of plant, and to capture modularity gains that a project-level view would miss

- The proportion of core technology to balance of plant (BOP) also matters when assessing design complexity and when crediting a system with the risk-mitigating benefits of modularity

- ARL (Adoption Readiness Level), to consider the non-technology risks associated with the project, from value proposition to market acceptance to resource maturity to license to operate

- Project Development Milestones, to consider actual asset-level progress
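Because the framework is explicitly a work in progress, the sketch below does not invent weights for a single score; it only lists which of the risk centers above still look open for one project. Field names, thresholds, and the `readiness_flags` helper are hypothetical. The 10x core scale-up threshold echoes the Crawl, Walk, Run takeaway, and the adoption risk areas are the ones named in the ARL bullet.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectProfile:
    """Hypothetical inputs for an indicative Project Readiness Level check."""
    trl: int                  # Technology Readiness Level, 1-9
    core_unit_size: float     # largest core-technology unit in this project
    proven_unit_size: float   # largest core-technology unit already operated
    # ARL areas not yet de-risked, e.g. "value proposition", "license to operate"
    open_adoption_risks: list[str] = field(default_factory=list)
    milestones_done: int = 0  # e.g. site control, permits, offtake, FID
    milestones_total: int = 0


def readiness_flags(p: ProjectProfile, max_core_scale_up: float = 10.0) -> list[str]:
    """List the risk centers that still look open for a single FOAK project."""
    flags = []
    if p.trl < 9:
        flags.append(f"TRL {p.trl}: design likely not yet standardized")
    # Scale-up is measured on the core technology unit, not the project nameplate.
    core_scale_up = p.core_unit_size / p.proven_unit_size
    if core_scale_up > max_core_scale_up:
        flags.append(f"core technology scale-up of {core_scale_up:.0f}x")
    for area in p.open_adoption_risks:
        flags.append(f"adoption risk open: {area}")
    outstanding = p.milestones_total - p.milestones_done
    if outstanding > 0:
        flags.append(f"{outstanding} development milestone(s) outstanding")
    return flags


# Hypothetical modular project: many copies of a module already proven at full size.
project = ProjectProfile(trl=8, core_unit_size=1.0, proven_unit_size=1.0,
                         open_adoption_risks=["market acceptance"],
                         milestones_done=3, milestones_total=5)
print(readiness_flags(project))
```

A checklist of open risk centers is deliberately less ambitious than a composite score; it keeps each risk visible until there is enough data to justify how the centers should be weighed against each other.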

Note: This focuses on the readiness of a singular FOAK project. Elemental’s Commercial Inflection Point Scale (adapted from TRL and ARENA’s Commercial Readiness Index) presents a broader view of the technology commercialization journey & its bankability.

Within this Project Readiness framework, data can be put to good use for TRL 7–8 projects, where scale-up risks are uncertain, in a few specific areas.

For developers:

- Smarter Financial Planning with Historical Cost Overruns: Historical cost overrun data can inform contingency planning and set more realistic budget expectations, particularly for complex projects. Consider Professor Bent Flyvbjerg’s Reference Class Forecasting approach.

- Using Precedents in Core Technology to Focus on Balance of Plant Risks: Distinguishing between the core technology (e.g., hydrogen electrolyzers, solar modules, nuclear reactors) and the overall project (including balance of plant) is essential when evaluating scale-up risks. If the core technology is highly modular, it can often be tested at full commercial scale in lab and pilot environments and then simply repeated (with identical design topology) to scale up a project. For example, with many thermal energy storage technologies, a full commercial project may look like a 100x scale-up but is essentially just 100 identical modules working in concert. In these cases, stakeholders should prioritize assessing and managing integration risks with the balance of plant (addressed further below).
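In its simplest form, Reference Class Forecasting sets a budget uplift from the distribution of overruns in a class of comparable completed projects, rather than from the project’s own bottom-up estimate. The sketch below is a minimal version under stated assumptions: the reference class values are made up, `rcf_budget` is a hypothetical helper, and the uplift is simply the empirical quantile of historical overruns at the chosen confidence level. In practice, choosing a genuinely comparable reference class is the hard part.

```python
from statistics import quantiles


def rcf_budget(base_estimate: float, reference_overruns: list[float],
               confidence: float = 0.8) -> float:
    """Budget that the reference class suggests will hold with `confidence` probability.

    `reference_overruns` are fractional overruns (0.25 = 25% over budget) from
    comparable completed projects; the uplift is their empirical quantile.
    """
    if not 0.01 <= confidence <= 0.99:
        raise ValueError("confidence must be between 0.01 and 0.99")
    # 99 cut points at 1%, 2%, ..., 99%; pick the one for the chosen level.
    cuts = quantiles(reference_overruns, n=100, method="inclusive")
    uplift = cuts[round(confidence * 100) - 1]
    return base_estimate * (1 + uplift)


# Hypothetical reference class of eleven comparable projects (not real data).
reference = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
print(rcf_budget(100.0, reference))  # base estimate of 100 (e.g. $M), P80 budget
```

With this made-up reference class, a base estimate of 100 becomes a P80 budget of about 180. Raising `confidence` buys more protection against overruns at the cost of a larger contingency.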

For investors & capital deployment stakeholders

- Incorporating Historical Cost Overruns into Investment Diligence: Historical cost overrun data can be a critical tool for evaluating whether a potential investment’s costs are reasonable. Though most investors looking at emerging infrastructure investments already look at downside scenarios, having actual historical cost overrun data can inform more accurate and precise downside scenario planning (and even encourage more investment in technology areas where the downside is overstated or unknown).

- Using a Modularity Score to Inform More Flexible Resource Allocation: Technologies with higher modularity scores (like solar power) benefit from phased implementation approaches. This allows for more flexible resource allocation and more efficiently recycled construction revolver capital. With a modularity score that can compare technologies, banks should be able to offer more flexible capital for non-solar technologies with a similar modularity score.

Lesson 1: Make modularity a core design principle (Case: Standard Microgrid in African microgrids)

We can assess the impact of modularity as a risk mitigant in a real-world example. One of us (Caleb) has seen its benefits first-hand as a technology developer crossing the FOAK threshold to become a project developer with Standard Microgrid, a company installing solar microgrids in rural Zambian villages. By focusing on modularity, Standard Microgrid brought electricity to places that previously went dark at night.

Standard Microgrid successfully crossed the FOAK threshold by using modularity as a central design principle. This approach not only enabled the company to overcome the inherent risks associated with FOAK projects but also made its microgrids more scalable and bankable. By integrating modularity into its designs, Standard Microgrid made each installation a repeatable process. Each successive microgrid deployment benefited from the learnings of the last, allowing for continuous improvement in design, execution, and efficiency (the “Learning Rate” discussed in the previous post). Project costs fell 30% from pilot to FOAK, and a further 10% over the first 5 deployments before stabilizing. Project schedules improved by a full 40% from FOAK to NOAK (Nth-of-a-kind). This repeatability didn’t just make projects faster to deploy; it also drove down risk, because unforeseen technical or regulatory challenges encountered in one installation could be proactively addressed in future ones.
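The “Learning Rate” is commonly modeled with Wright’s law: each doubling of cumulative deployments cuts unit cost by a fixed fraction. As an illustration, the sketch below backs out the learning rate implied by the 10% cost decline over the first five deployments. It assumes that decline runs from deployment 1 to deployment 5 and follows Wright’s law exactly; both are simplifying assumptions, and the function names are hypothetical.

```python
import math


def implied_learning_rate(cost_ratio: float, n: int) -> float:
    """Learning rate per doubling implied by cost(n) / cost(1) == cost_ratio.

    Wright's law: cost(n) = cost(1) * n ** (-b); the learning rate is the
    fractional cost drop per doubling of cumulative units, 1 - 2 ** (-b).
    """
    b = -math.log(cost_ratio) / math.log(n)
    return 1 - 2 ** (-b)


def wright_cost(first_cost: float, n: int, learning_rate: float) -> float:
    """Unit cost of the n-th deployment under Wright's law."""
    b = -math.log2(1 - learning_rate)
    return first_cost * n ** (-b)


lr = implied_learning_rate(0.90, 5)  # 10% lower cost by the 5th deployment
print(f"Implied learning rate: {lr:.1%} per doubling")
print(f"Cost of 5th deployment (1st = 100): {wright_cost(100.0, 5, lr):.1f}")
```

Under these assumptions the implied rate is roughly 4% per doubling. Because costs stabilized after the first five deployments, a pure Wright’s law fit would overstate later savings, so any extrapolation should be checked against observed data.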

The real-world benefits of modularity in Standard Microgrid’s approach included:

- Design: Easier to design systems with reduced manpower, thanks to repeatable templates.

- Procurement: Simplified procurement and logistics management due to standardized equipment needs.

- QA/QC: Easier to maintain consistent quality control when each microgrid was the same, ensuring reliable outcomes.

- SOPs: Standard Operating Procedures (SOPs) could be created for all projects, and improved over time based on consistent project execution.

- Staff Improvement: Installation teams became more skilled with each deployment, as every microgrid followed the same blueprint.

- Training Improvement: Onboarding and training new hires was more efficient with a standardized project structure.

- Financial Modeling: Easier to model the entire portfolio and educate investors when the portfolio consists of 150 iterations of the same project, rather than 150 unique projects.

- Investor Diligence: Easier for investors to diligence a single asset with single design, than to diligence each probable design and project configuration distinctly.

- Permitting and Regulatory: The portfolio received pre-approval from Zambia’s national electricity regulator (the Energy Regulation Board) because the ERB could diligence the modular design once — enabling faster project roll-out across multiple sites.

- Redeployment: Any failing project could be redeployed to a different site, since each project is identical, materially mitigating the risk of a single nonperforming asset.

By leveraging modularity, Standard Microgrid crossed the FOAK gap, significantly reduced its technical, financial, and regulatory risks, and now supplies electricity to more than 13,000 people. Modularity proved to be the key factor in making this energy infrastructure less risky, more scalable, and more investable, showing how modularity can turn high-risk projects into scalable, investable ventures.

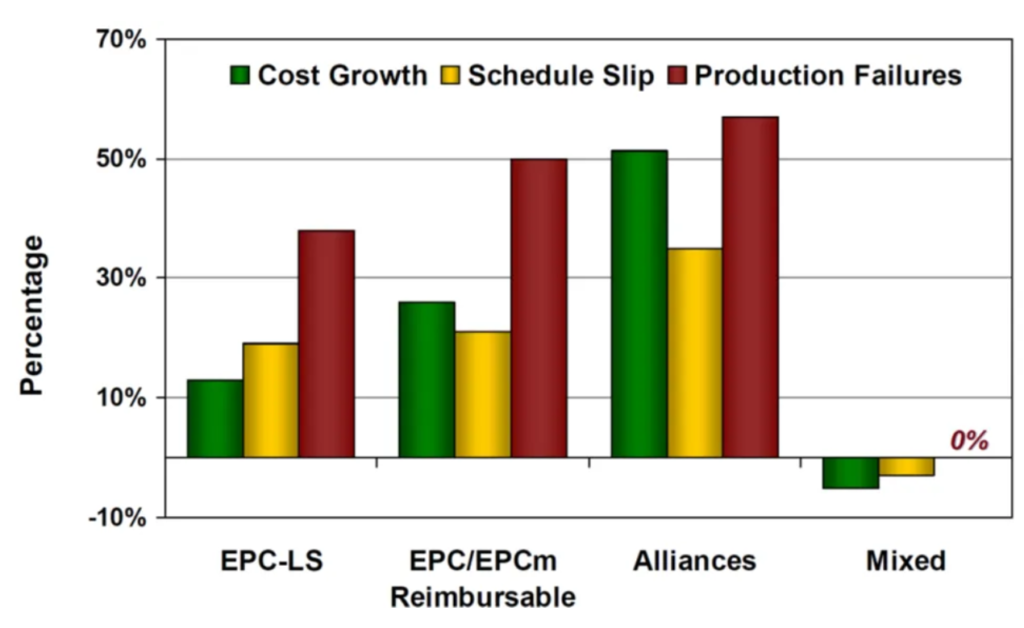

Lesson 2: Separate the E from the P & C

Many investors and project developers in the FOAK space have experienced the cascading challenges of launching construction without a fully designed project. One of us (Christian) was involved in a project with a cost overrun of more than 3x due to incomplete design work. When the design work was finally completed, 30–50% of the way through construction, the team was able to use data on the work done to date to produce an accurate budget and schedule (Professor Flyvbjerg describes a similar but much larger example, the Hong Kong MTR case, in his book How Big Things Get Done). For true FOAK projects with new inventions, design and scope changes are inevitable. However, completing as much of the design work upfront as possible, with a full FEED or FEL3, is best practice for setting realistic budget and schedule expectations.

An often-overlooked practice to prevent these cost overruns is to separate the E (Engineering) contracting scope from the P & C (Procurement and Construction) contracting scope.

IPA, a major infrastructure engineering and advisory firm, shows in a recent presentation that this “mixed” contracting approach significantly outperforms, on budget, performance, and schedule, both lump-sum and time-and-materials contracts (“EPCm reimbursable” in the chart below) executed by a single EPC contractor. This may not seem intuitive at first, but IPA’s research shows that a separate contractor providing only the engineering scope is more likely to be incentivized to produce an independent and realistic project scope.

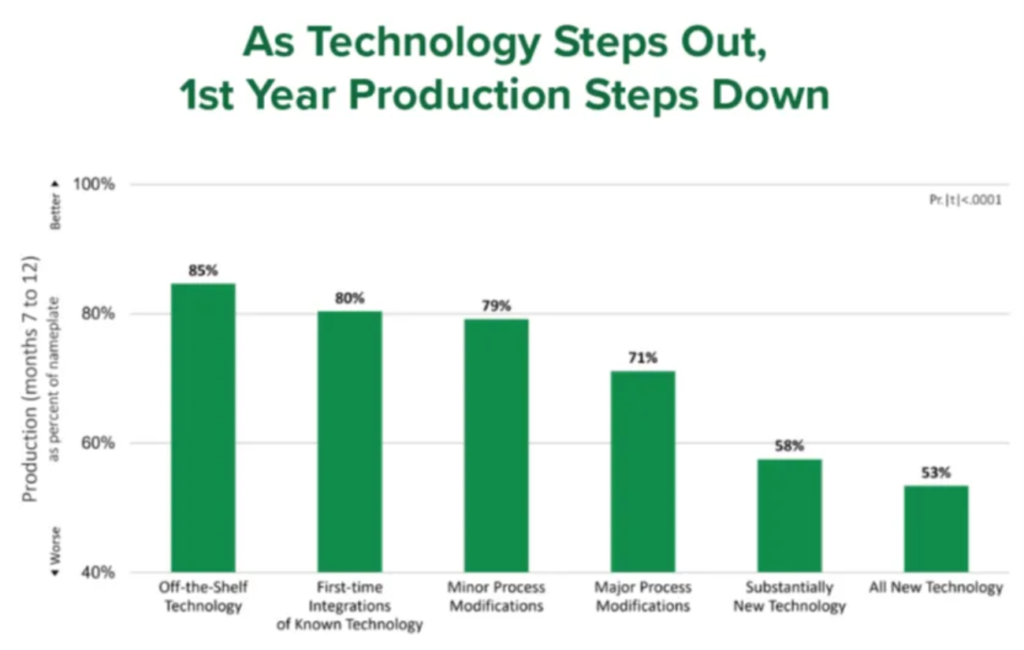

Lesson 3: Consider Pursuing Integration over Invention (Case from H2 Green Steel)

H2 Green Steel (H2GS) has developed a pioneering approach to producing green steel by integrating existing mature technologies in a novel way. Instead of scaling entirely new technologies, H2GS combined proven elements, hydrogen electrolysis and electric arc furnace (EAF) steelmaking, into a single, cohesive system. This approach allowed them to avoid technology risk in favor of integration risk, a different but more manageable challenge.

Challenges:

- While hydrogen-based steelmaking is not yet common, the individual technologies — hydrogen electrolysis, direct reduction of iron (DRI), and EAF — are well-established.

H2GS’s Solution:

- Integration of Existing Technologies: H2GS utilized off-the-shelf components from existing industries (e.g., electrolysis from hydrogen production and EAFs from traditional steelmaking). This helped them avoid the pitfalls of scaling unproven tech, and enabled them to fully fund a 5 billion Euro FOAK facility.

Key Takeaways:

- Leverage Existing Technology to Reduce Risk: By using proven technologies in a new configuration, H2GS avoided the higher costs and uncertainties of scaling entirely new systems.

- Focus on Integration Over Invention: Integration risk can be more easily managed than technology risk, especially when the component technologies are well-understood. This reduces both cost and complexity. However, integration risk even with known technology still has its own challenges and risks that can be assessed and managed. Recall the chart below:

H2 Green Steel’s approach to building Europe’s first large-scale green steel plant demonstrates how innovative companies can successfully commercialize sustainable technologies by carefully managing integration and financial risks, rather than taking on the burden of developing new technologies from scratch.

Conclusion

The journey from concept to commercialization for FOAK projects is challenging, but data-driven strategies can significantly reduce risk. By using frameworks like Project Readiness Level, developers and investors can better manage key risk factors — from technology maturity to integration challenges — enabling more informed decision-making. The real-world examples shared in this post demonstrate how principles like modularity, strategic EPC segmentation, and intelligent integration can transform high-risk projects into scalable, investable ventures.

Standard Microgrid’s modular approach, strategic EPC contracting, and H2 Green Steel’s focus on integration over invention all highlight the importance of aligning design, execution, and finance through thoughtful planning and data insights. These lessons illustrate that while FOAK projects are inherently risky, strategic use of data can not only bridge the gap but also unlock new pathways for growth, scalability, and sustainability.

As the climate tech sector grows, those who leverage these insights will be better equipped to drive scalable, sustainable solutions.
